Meditation Buddy

Summary

Meditation Buddy was my thesis project at Tsinghua University. I created the project and saw it through from initial concept to launch, then tested it with 20 participants to evaluate the app’s effectiveness.

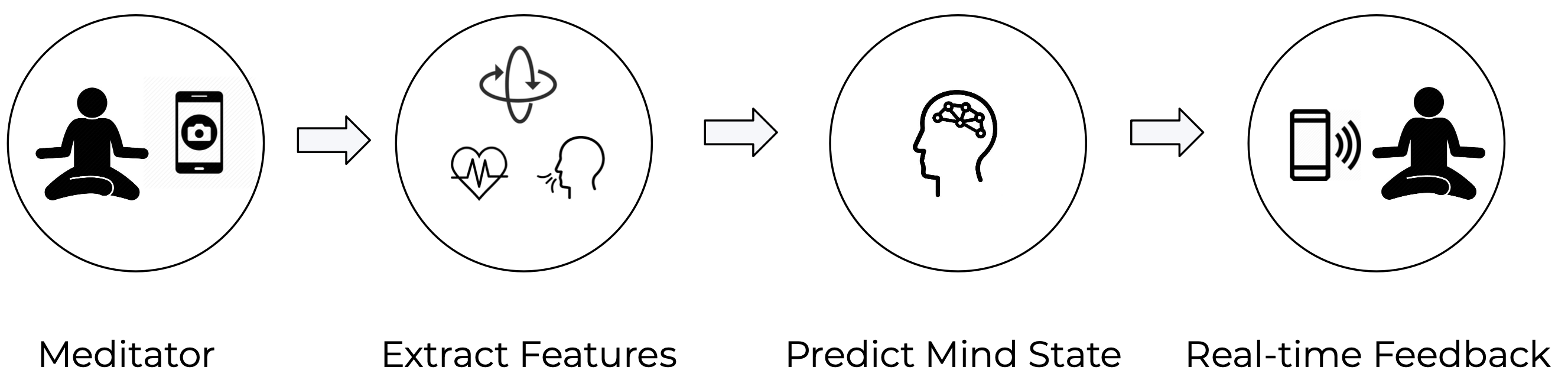

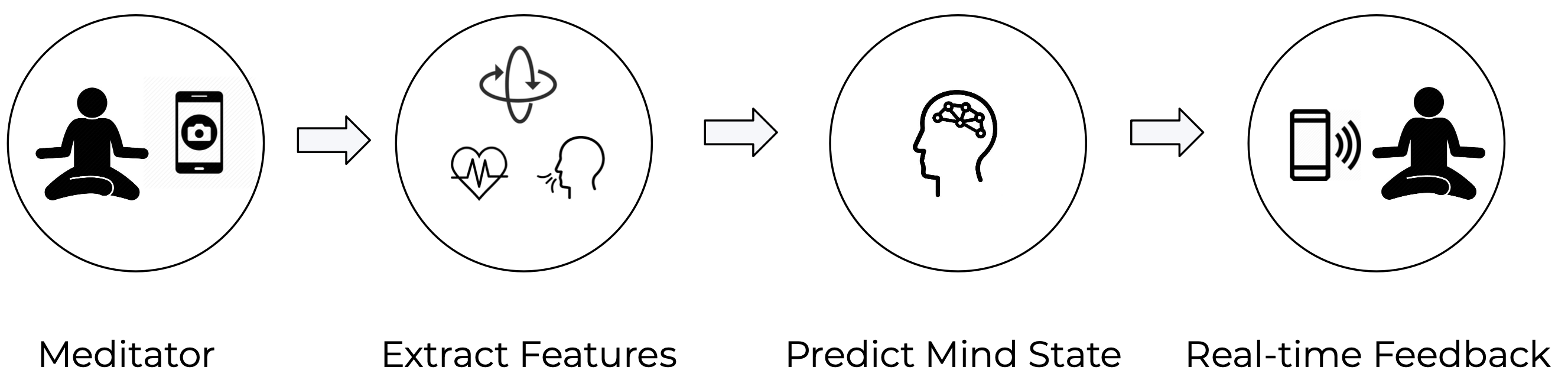

Meditation Buddy is a meditation app that uses the smartphone camera to assess the position of the user’s head and upper torso. Based on their body position over the last 6 seconds, the app infers whether or not they are meditating. If they are deemed “distracted” for 21 seconds, a gong sound plays to redirect their attention. Each meditation session lasts 10 minutes.

I really enjoyed this project because it let me combine what I learned in yoga with technology. I’ve been practicing yoga for over 10 years, so it was exciting to take what I learned about computer vision, data science, and development and combine it with meditation, one of yoga’s tools.

Problem Space

Meditation is a common practice that can effectively help people manage psychological stress. One key challenge for new meditators is regaining mindfulness when their minds start to wander.

The Monkey Mind

A common metaphor in the meditation world is that our minds are like monkeys, swinging from thought to thought[5]. Through meditation, we can stop the monkey from swinging to the next thought, focusing instead on a single thought while letting go of the distractions that come up. With continued practice, we become better at taming the monkey that is our mind.

While there are many different types of meditation, almost all of them involve focusing the mind on a single object, such as a sensation in the body, a sound, or a thought. In these meditations, the act of meditation is the moment when the meditator notices that their mind is wandering (that the monkey mind has swung to another branch) and brings their attention back to the object of focus. Experienced meditators are usually better than new meditators at noticing when their minds wander[6].

How to Stop that Monkey

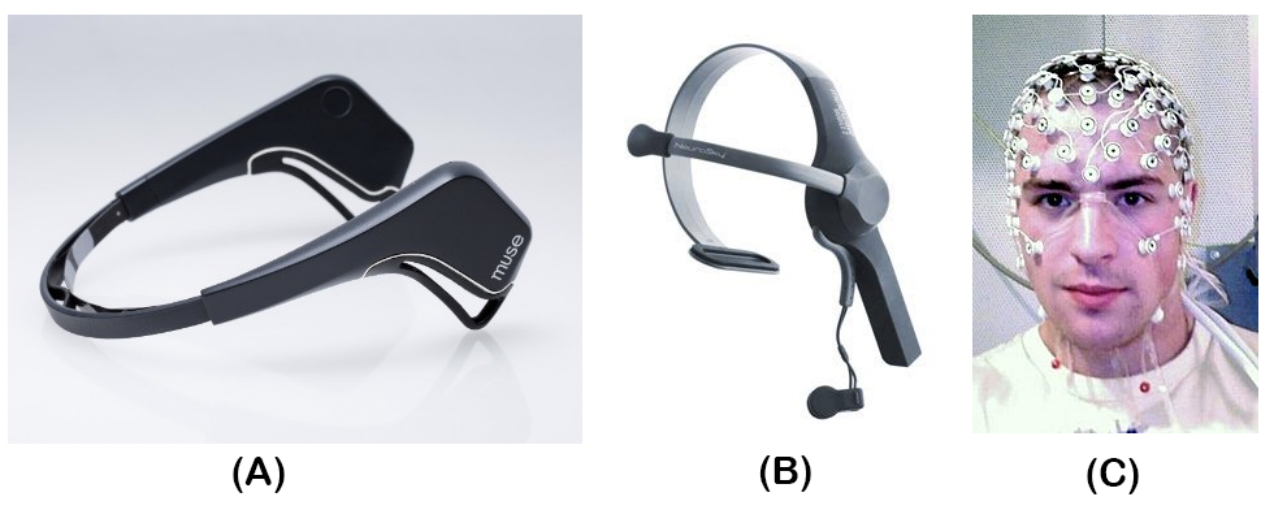

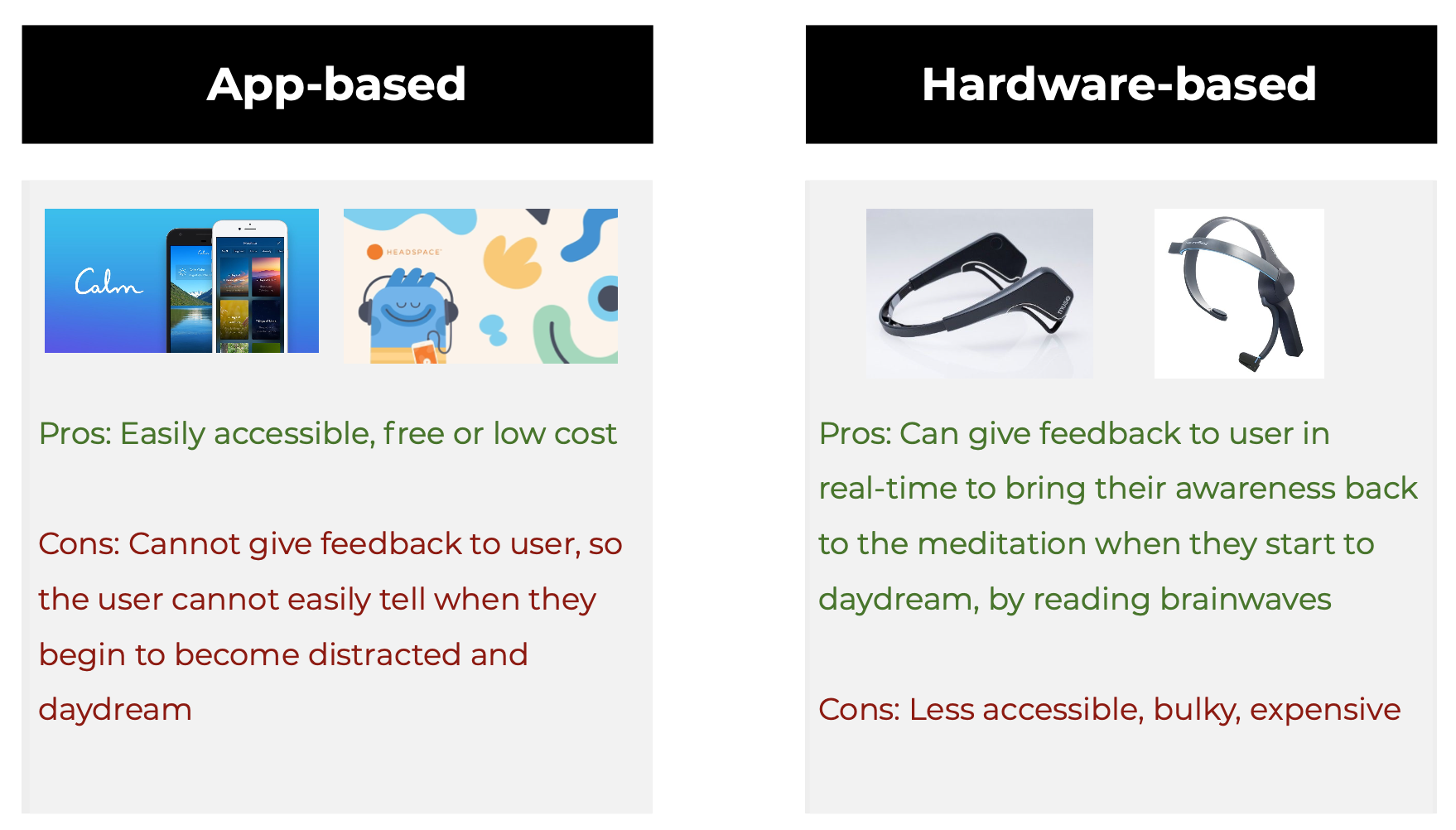

For new meditators, meditation is often a challenge[7]. Distractions, questions about the technique, and uncertainty about whether they are doing it correctly are all common obstacles. Early in their practice, meditators try a variety of methods: learning in person from a meditation instructor, following a guided meditation in an online app, or using brain-computer interface (BCI) devices such as the Muse or NeuroSky headsets, which measure EEG signals to estimate the state of the brain.

Of these methods, only the instructor and BCI approaches give feedback and clarification that let new meditators know whether they are practicing correctly. However, these methods require either a large time commitment or a significant amount of money. The app method, on the other hand, is very accessible, but its drawback is that users cannot get feedback on whether they are practicing the technique correctly.

Proposed Solution

A non-contact method for determining meditation level and giving feedback is proposed. This solution needs no BCI device to detect the meditation level, combining the accessibility of an online app with the feedback advantages of a BCI device. Instead, a video stream is processed to find the relevant keypoints of the body, which are then sent to a machine learning model built specifically to infer brain states from those keypoints.

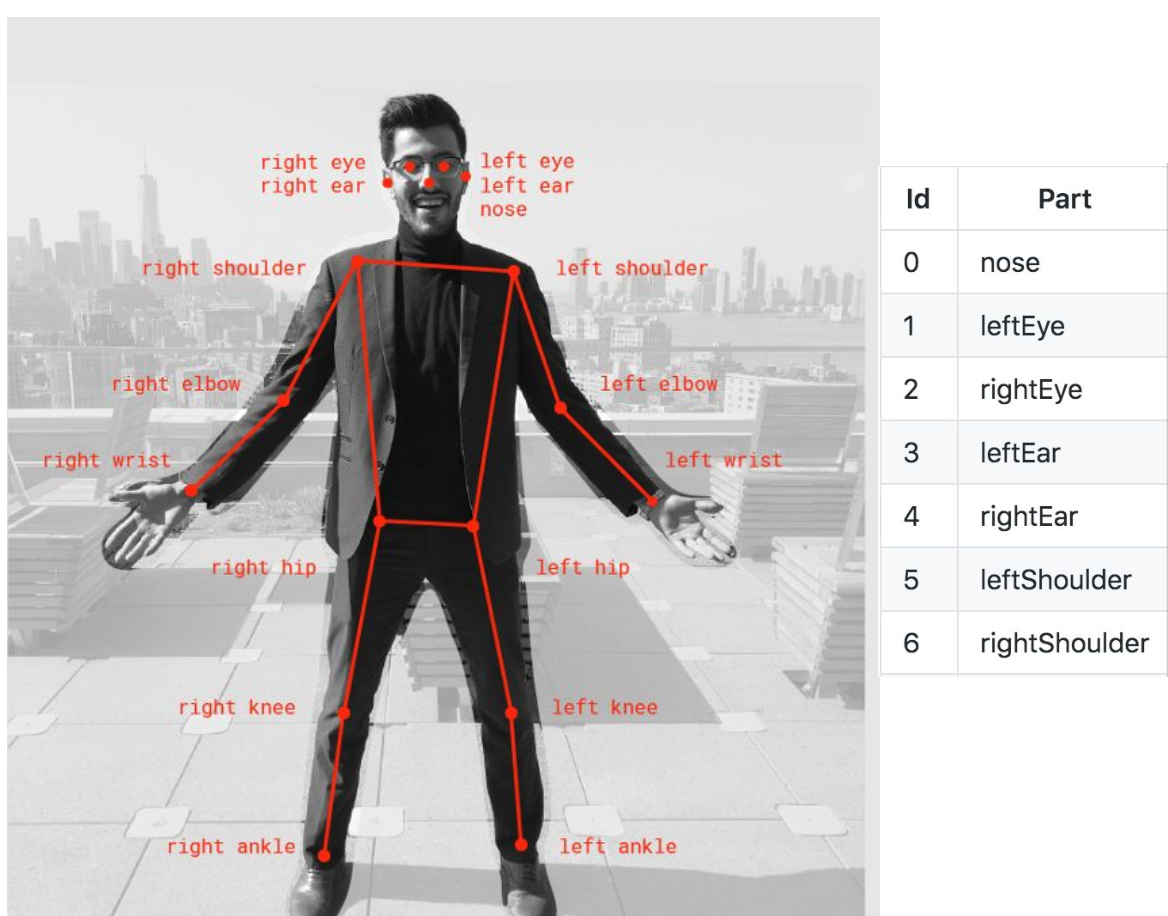

Posenet and Finding Keypoints

Posenet[34][35] is a machine learning model that takes a video or image and gives a 2-D pose estimate for any people in the frame. It has since been improved by the Google Brain team and integrated into the TensorFlow.js library[36].

Posenet has been used in a number of research areas, such as improving golfers’ swings[37] and recognizing human activity[38]. Posenet was chosen for this research because of its reliability and the speed at which it can make predictions about the human body in an image. Additionally, because it is integrated into TensorFlow.js, whose documentation and support are reliable, it is a good candidate for running on a smartphone.
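As a minimal sketch of how Posenet’s output could be reduced to the head and upper-torso keypoints this app relies on, the Python function below reads one pose in the shape Posenet returns in TensorFlow.js: a list of keypoints, each with a `part` name, a `score`, and an `x`/`y` `position`. The seven parts in `UPPER_BODY_PARTS`, the function name `upper_body_coords`, and the `min_score` cutoff are assumptions for illustration, not details taken from the original app.

```python
# Assumed selection of Posenet parts for the "head and upper torso";
# the write-up does not list the exact keypoints used.
UPPER_BODY_PARTS = (
    "nose", "leftEye", "rightEye", "leftEar", "rightEar",
    "leftShoulder", "rightShoulder",
)


def upper_body_coords(pose: dict, min_score: float = 0.0) -> list[float]:
    """Return [x0, y0, x1, y1, ...] for UPPER_BODY_PARTS in a fixed order.

    `pose` has the shape Posenet returns in TensorFlow.js:
    {"score": float, "keypoints": [{"part": str, "score": float,
                                    "position": {"x": float, "y": float}}]}
    Missing keypoints, or ones scoring below the hypothetical `min_score`,
    are recorded as NaN so every frame has the same length.
    """
    by_part = {kp["part"]: kp for kp in pose["keypoints"]}
    coords: list[float] = []
    for part in UPPER_BODY_PARTS:
        kp = by_part.get(part)
        if kp is None or kp["score"] < min_score:
            coords.extend([float("nan"), float("nan")])
        else:
            coords.extend([kp["position"]["x"], kp["position"]["y"]])
    return coords
```

Keeping a fixed part order means every frame produces a vector of the same length, which is what a downstream model expects regardless of which keypoints Posenet detected confidently.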

Data Collection

To predict the user’s brain state from video data, I needed to record many different users meditating in front of a camera while wearing the Muse headset. The Muse headset provided a “ground truth” for each user’s meditation state.

Twelve participants with varying degrees of meditation experience were recruited (5 male and 7 female). Ten of them had little to no experience with meditation.

Brainwaves and Relative Alpha

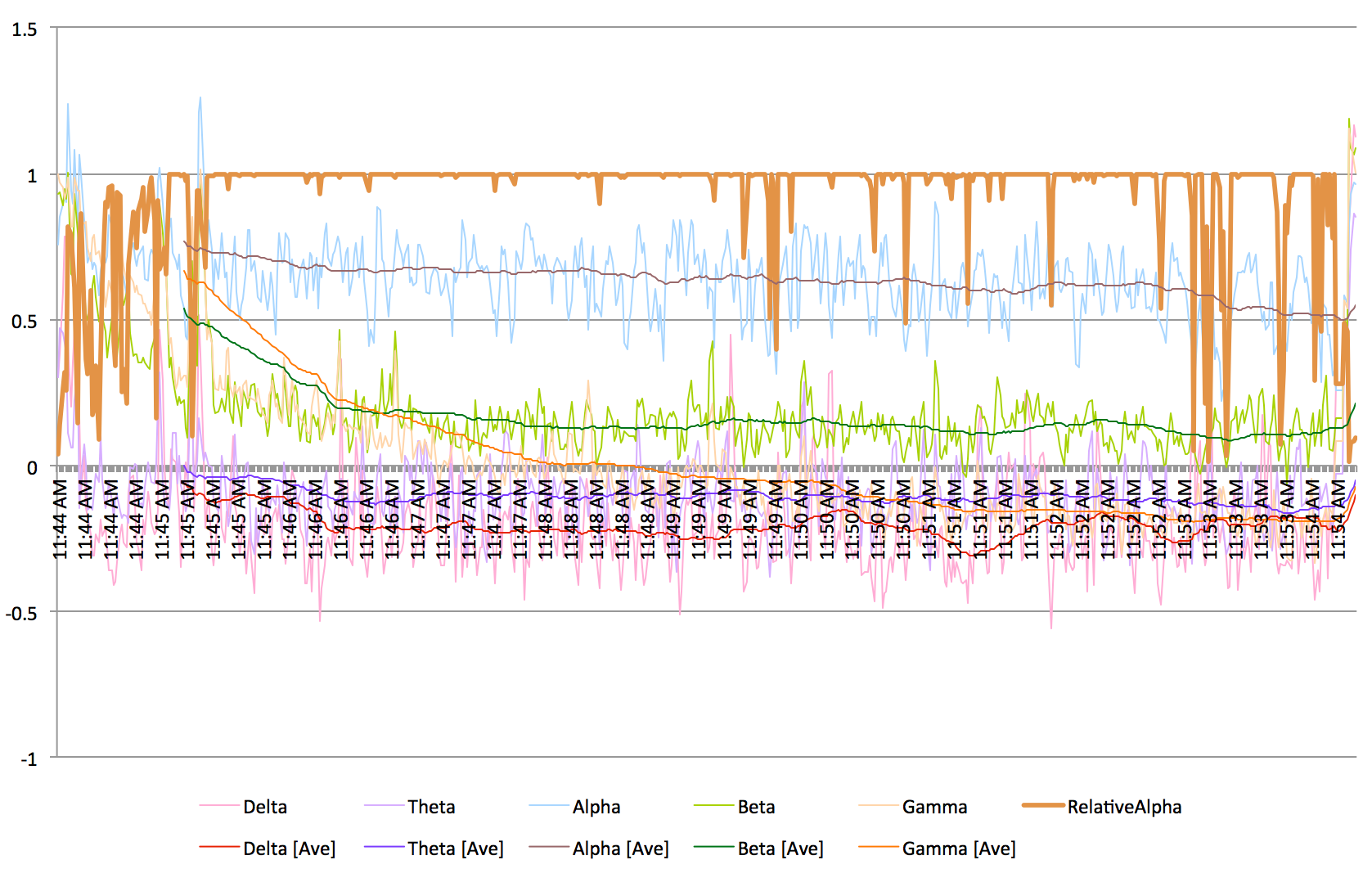

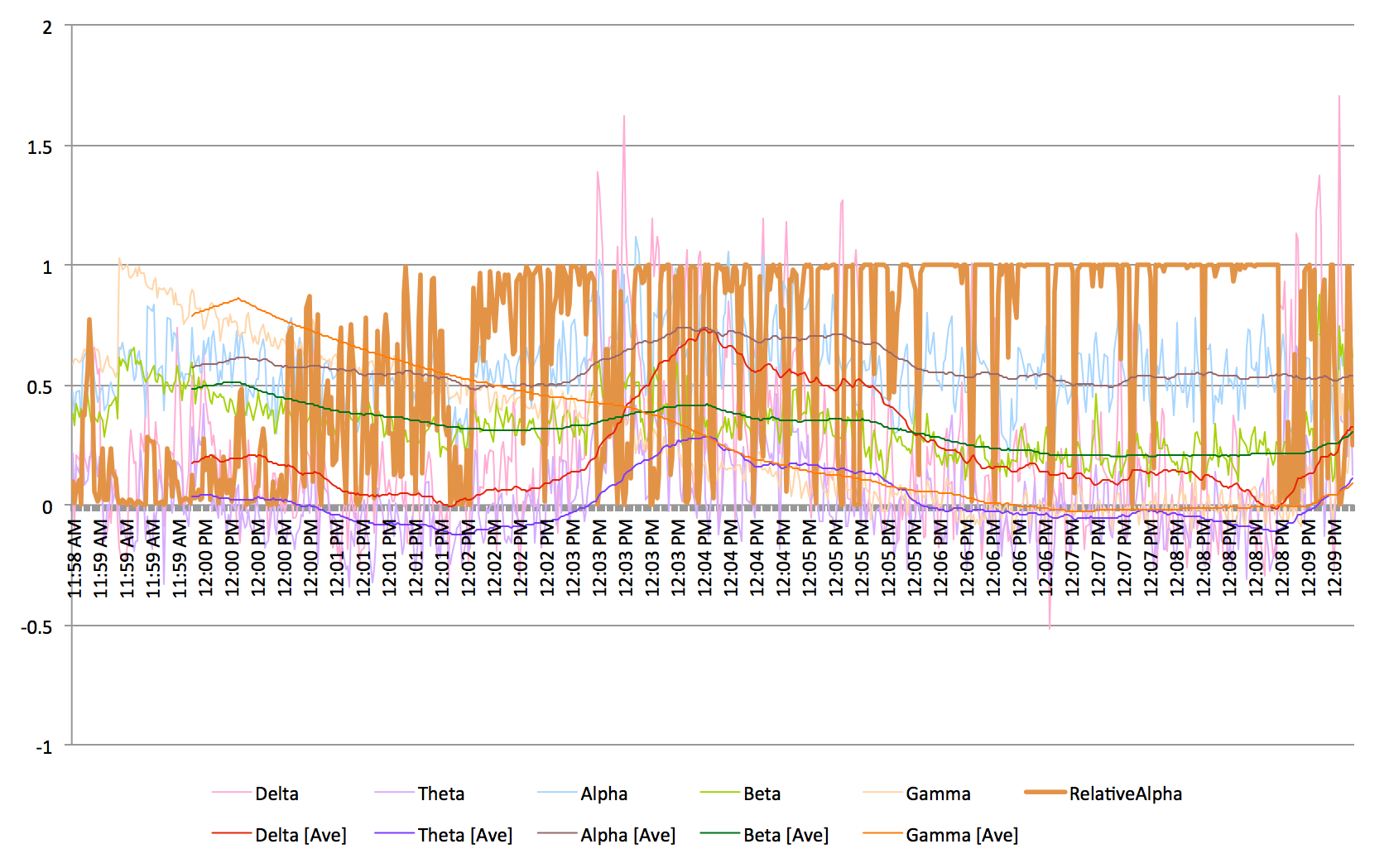

Different patterns of brainwaves can be recognized by their amplitudes and frequencies. Brainwaves can then be categorized based on their level of activity or frequency.

At any given time, our brain produces all of these waves. Many studies have looked at the ratio and strength of the waves during meditation. Numerous studies[19][28][29][30][31][32] have noted a higher relative alpha (sometimes called alpha power) during meditation. For instance, a review of studies involving EEG and meditation by Cahn et al. found that “Alpha power increases are often observed when meditators are evaluated during meditating compared with control conditions.”[28]

To get a more accurate picture of when people were meditating, I used a formula for the relative alpha power throughout each session. This formula, used by the creators of the Muse headset[39], measures the power of the alpha waves relative to the other brainwaves.

$$\text{Relative Alpha} = \frac{10^{\alpha}}{10^{\alpha} + 10^{\beta} + 10^{\delta} + 10^{\gamma} + 10^{\theta}}$$

This formula outputs a value between 0 and 1. The closer the value is to 1, the more dominant the alpha waves are, and the more confidently the user can be inferred to be in a meditative state.
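The formula translates directly into code. This Python sketch assumes, as in Muse’s band-power exports, that each band value is a log10-scaled absolute power for one sample, so `10 ** x` returns it to a linear scale before the ratio is taken; the function name is hypothetical.

```python
def relative_alpha(alpha: float, beta: float, delta: float,
                   gamma: float, theta: float) -> float:
    """Relative alpha = 10^alpha / (10^alpha + 10^beta + 10^delta + 10^gamma + 10^theta).

    Assumes each argument is a log10-scaled absolute band power, so 10 ** x
    converts it back to linear power. The result is always between 0 and 1.
    """
    powers = [10 ** alpha, 10 ** beta, 10 ** delta, 10 ** gamma, 10 ** theta]
    return powers[0] / sum(powers)
```

With all five bands equal, each contributes one fifth and the result is 0.2; raising alpha one log unit above the others gives 10/14, roughly 0.71.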

With the brainwaves and their relative alpha graphed over time, the next step was to find the moments in the video when the user had high and low relative alpha and clip them, creating clips of meditation and distraction, respectively.

Labeling Procedure

- Identify areas in the graph that showed focus (relative alpha at or above 98%) or distraction (clear drops in relative alpha, or streaks below 80%).

- Find the corresponding time in the video.

- Cut a series of new 6-second video clips of the participant during this time, starting 3 seconds apart.

- Save the data in the corresponding distracted or focused folder.

Each video segment is cut into 6-second clips starting every 3 seconds, creating overlapping windows of video data. The aim of this overlapping-window technique is to generate more data for the machine learning algorithm, as discrete clips with no overlap produced less data than originally hoped. Six-second clips were chosen as a compromise: if the clips were too short, we could not get an accurate picture of the user’s state, and if they were too long, we could not give the user feedback quickly enough.
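The overlapping-window scheme can be sketched as a small Python function that lists the start and end times, in seconds, of every 6-second clip, spaced 3 seconds apart, that fits inside a labeled segment. The function name and the rule of dropping a final partial clip are assumptions.

```python
def overlapping_windows(start: float, end: float, length: float = 6.0,
                        step: float = 3.0) -> list[tuple[float, float]]:
    """Return (clip_start, clip_end) times of every `length`-second clip that
    begins `step` seconds after the previous one and fits inside [start, end].

    A final partial clip that would run past `end` is dropped.
    """
    windows = []
    i = 0
    # Compute each start from the index to avoid accumulating float error.
    while start + i * step + length <= end:
        clip_start = start + i * step
        windows.append((clip_start, clip_start + length))
        i += 1
    return windows
```

For a 15-second segment this yields four clips (0-6, 3-9, 6-12 and 9-15 s), whereas non-overlapping clips (`step=6.0`) would yield only two.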

Using the relative alpha formula, focused meditation clips were taken from clear streaks of high alpha (relative alpha at or above 98%), and distracted clips were taken from clear drops in relative alpha or streaks with relative alpha below 80%. In total, 149 focused meditation clips and 142 distracted clips were obtained.
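Finding “clear streaks” in the 1 Hz relative alpha series can be approximated by scanning for runs of consecutive samples that pass a threshold. The Python sketch below assumes the 98% and 80% cutoffs refer to relative alpha values of 0.98 and 0.80. The helper names and the minimum run length of 6 samples (enough for one 6-second clip at 1 Hz) are hypothetical, and the manual judgment used to spot “clear drops” is not captured.

```python
from typing import Callable


def is_focused(rel_alpha: float) -> bool:
    return rel_alpha >= 0.98   # "at or above 98%"


def is_distracted(rel_alpha: float) -> bool:
    return rel_alpha < 0.80    # "below 80%"


def find_streaks(values: list[float], condition: Callable[[float], bool],
                 min_length: int = 6) -> list[tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, for runs of at least
    `min_length` consecutive values that satisfy `condition`.

    With 1 Hz Muse data the indices are seconds from the start of the recording.
    """
    streaks = []
    run_start = None
    for i, value in enumerate(values):
        if condition(value):
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_length:
                streaks.append((run_start, i))
            run_start = None
    # Close a run that lasts until the end of the recording.
    if run_start is not None and len(values) - run_start >= min_length:
        streaks.append((run_start, len(values)))
    return streaks
```

Each returned (start, end) pair marks a stretch of the session whose video could then be cut into clips for the matching folder.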

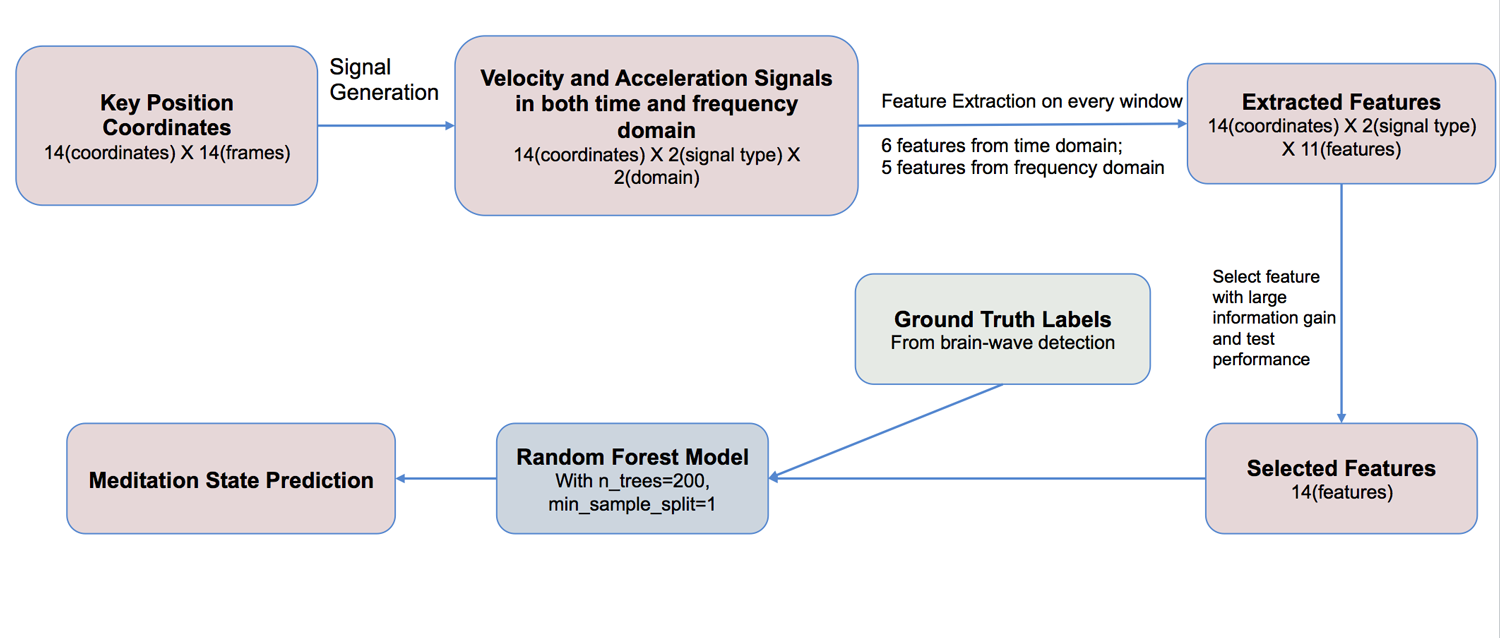

Building the Model

Evaluating the Model

Building the App

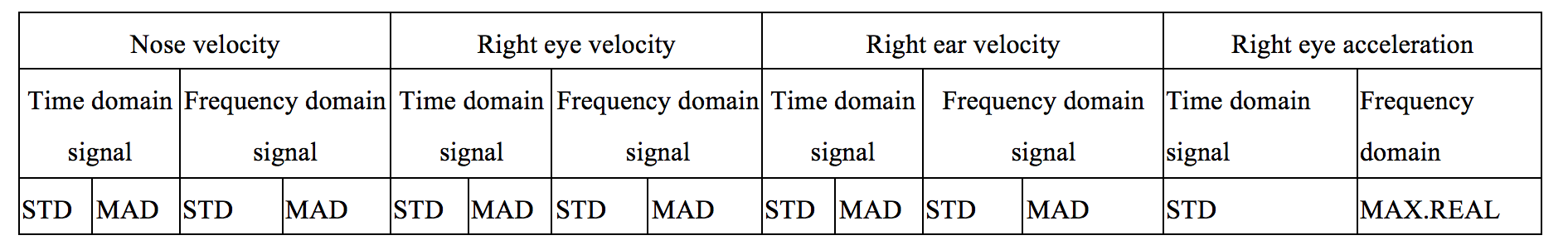

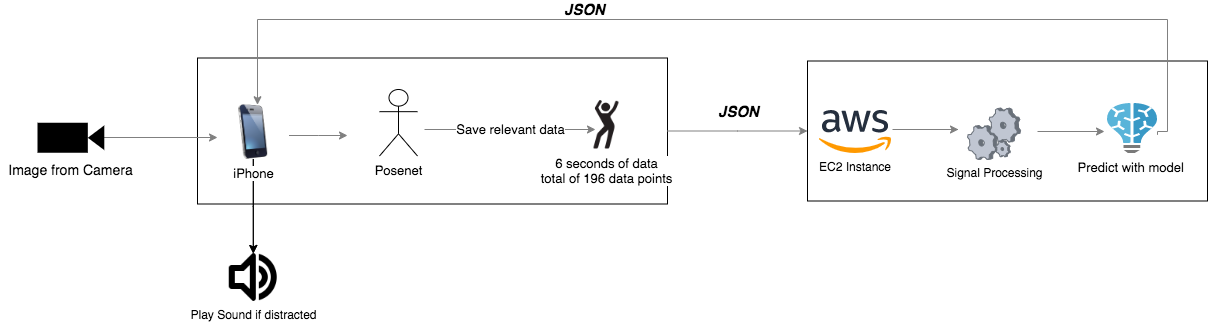

I used Expo.io to build the app with React Native, a JavaScript framework for building apps that run on the web, Android, or iOS. The app uses TensorFlow.js and Posenet to gather the coordinates of relevant body keypoints. It collects 196 data points, sampling roughly 2.3 frames per second over six seconds, and sends them as JSON to an Amazon EC2 instance running the machine learning model. The server then extracts the features and makes a prediction, which it sends back to the phone running the app.
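The figure of 196 values is consistent with one plausible breakdown: about 14 frames in six seconds (14 / 6 ≈ 2.3 fps), each carrying the x and y coordinates of seven head and shoulder keypoints (14 × 7 × 2 = 196). The write-up does not spell this out, so the constants below are assumptions, as is the `keypoints` field name. The Python sketch shows how a server could decode such a JSON body and reshape it into per-frame rows before feature extraction.

```python
import json

# Assumed breakdown of the 196 values (not stated in the write-up).
FRAMES_PER_CLIP = 14       # ~2.3 frames per second x 6 seconds
KEYPOINTS_PER_FRAME = 7    # e.g. nose, eyes, ears, shoulders
COORDS_PER_KEYPOINT = 2    # x and y
VALUES_PER_FRAME = KEYPOINTS_PER_FRAME * COORDS_PER_KEYPOINT  # 14
VALUES_PER_CLIP = FRAMES_PER_CLIP * VALUES_PER_FRAME          # 196


def parse_payload(body: str) -> list[list[float]]:
    """Decode a JSON request body and reshape its flat list of values into frames.

    The "keypoints" field name is hypothetical.
    """
    values = json.loads(body)["keypoints"]
    if len(values) != VALUES_PER_CLIP:
        raise ValueError(f"expected {VALUES_PER_CLIP} values, got {len(values)}")
    return [values[i:i + VALUES_PER_FRAME]
            for i in range(0, VALUES_PER_CLIP, VALUES_PER_FRAME)]
```

Rejecting payloads of the wrong length keeps a truncated upload from silently shifting every coordinate into the wrong frame.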

After the phone receives the prediction, additional logic determines whether to play a feedback sound. It checks whether the session requires feedback, whether the sound has already been played in the past 20 seconds, and whether there have been three consecutive distraction predictions. The app uses a singing bowl sound that starts strong and fades into the background, as studies have shown it has a calming effect.
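The three feedback checks can be expressed as a small state machine. In this Python sketch, `FeedbackGate` and its parameter names are hypothetical and timestamps are seconds since the session began; the 20-second cooldown and the three-prediction streak come from the text.

```python
from typing import Optional


class FeedbackGate:
    """Decides after each prediction whether to play the feedback sound.

    Plays only if feedback is enabled for the session, the last
    `required_streak` predictions were all "distracted", and the sound has
    not played within the last `cooldown_s` seconds.
    """

    def __init__(self, feedback_enabled: bool,
                 cooldown_s: float = 20.0, required_streak: int = 3) -> None:
        self.feedback_enabled = feedback_enabled
        self.cooldown_s = cooldown_s
        self.required_streak = required_streak
        self._streak = 0                           # consecutive "distracted" predictions
        self._last_played: Optional[float] = None  # time the sound last played, in seconds

    def update(self, distracted: bool, now_s: float) -> bool:
        """Record one prediction made at `now_s`; return True if the sound should play."""
        self._streak = self._streak + 1 if distracted else 0
        if not self.feedback_enabled or self._streak < self.required_streak:
            return False
        if self._last_played is not None and now_s - self._last_played < self.cooldown_s:
            return False
        self._last_played = now_s
        return True
```

One design choice here is an assumption: the distraction streak is not reset after the sound plays, so continued distraction triggers the sound again as soon as the 20-second cooldown has passed.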

Once the seven minutes of meditation are over, the app saves the predictions, their timestamps, and the times the feedback sound played to the server. This data is used in the results section to compare the percentage of focused versus distracted predictions against the Muse “ground truth” data. The Muse data is also saved, including the alpha, beta, delta, theta, and gamma waves at a rate of 1 Hz, and an Excel macro is run to compute the relative alpha throughout the session.
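Computing the share of focused and distracted predictions from a saved session is a simple count. This Python sketch assumes the predictions are stored as the strings `"focused"` and `"distracted"`; the storage format and the function name are hypothetical.

```python
from collections import Counter


def prediction_percentages(predictions: list[str]) -> dict[str, float]:
    """Return the percentage of each label among a session's saved predictions.

    Assumes labels are stored as strings such as "focused" and "distracted".
    An empty session returns an empty dict rather than dividing by zero.
    """
    if not predictions:
        return {}
    counts = Counter(predictions)
    total = len(predictions)
    return {label: 100.0 * n / total for label, n in counts.items()}
```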

User Tests

Overall, the app collects data, processes it through machine learning, and provides feedback to help users improve their meditation practice. With everything in place, it was time to start testing it out.